The only thing harder than developing a WebRTC application is testing it. When our team at WebRTC.ventures started building live video applications five years ago, most of the work we did for clients and for ourselves amounted to prototypes. The success metric was an alpha or beta phase with potential customers, or simply “demo ware” that could be shown to potential investors in order to secure the funding to build the full-scale application. My, how times have changed!

Five years ago, WebRTC was still not a proven technology and the development options were limited. Outside of San Francisco, the world was still not enthusiastic about remote meetings or remote work. And many in industries like healthcare mocked the idea that some in-person visits could be replaced with a secure video chat.

Over the last few years, that mindset has changed from “I just need a prototype” to “I need a production-ready application that scales well as we grow.” As with many other aspects of video development, 2020 only accelerated an existing trend. Now those who previously dismissed the idea of remote work are among those asking for production-ready applications.

Two basic elements to building a scalable WebRTC application

- Choosing the right architecture. The correct architecture will vary based on your specific use case. More and more often, we are having early discussions with our customers about their end goals to make sure they select an architecture that best suits their long-term needs. For a variety of reasons, we may still go with an initial Phase 1 architecture that is easier or cheaper to build, but less scalable. Even then, we need to know what the Phase 2 architecture should be in order to achieve the long-term goals. These choices include what type of media server to use, or which CPaaS or open source platform you may want to base your video application on.

- Coming up with a testing plan. Regardless of your architectural choices, the work doesn’t stop there. A production-ready app needs to work on a variety of platforms, in different hardware and network configurations, and at various levels of user load. Testing everything is not a financially viable option in most cases, so you have to consider your use case carefully and apply the right layers of testing to that application. I will focus on this decision for the rest of this blog post.

Agile Software Engineering & Testing

Testing a software application is not easy. It’s not simply about hiring a “10x Developer” and assuming that an awesome developer means awesome code. Entire books have been written on this topic. Many consultants make a living teaching about the importance of testing and how to balance manual and automated testing of applications. When I was a consultant and trainer of agile methodologies, I regularly talked with my clients about agile engineering techniques and how to integrate them into their software development life cycles. It always sounds easier than it is in practice.

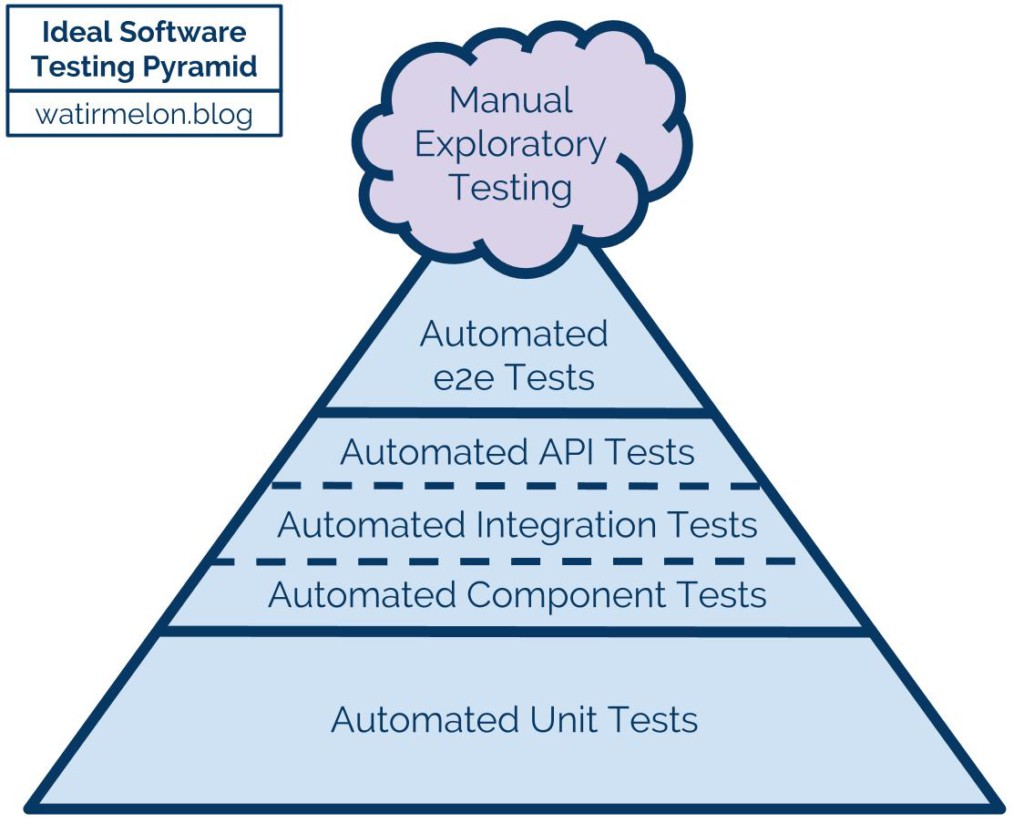

One of my favorite diagrams to explain the different layers of testing in agile teams is this testing pyramid from Alister B. Scott:

I recommend reading his post on the different types of pyramid diagrams he uses to talk about patterns and antipatterns in agile testing. For our purposes, it’s enough to summarize the above diagram as saying that agile teams will do most of their automation work at the lowest level of an application (unit tests) because those tests require the least amount of maintenance work to keep them viable and running fast. As an agile developer moves up the stack, they will also automate aspects of the GUI using tools like Selenium and Cucumber, but without trying to achieve 100% code coverage since those sorts of tests take longer to run and are more brittle and harder to maintain. But they still provide tremendous value to the manual testers, since they are now freed up to only do exploratory testing if all the “boring tests” are automated.

Formalizing Testing for WebRTC Applications

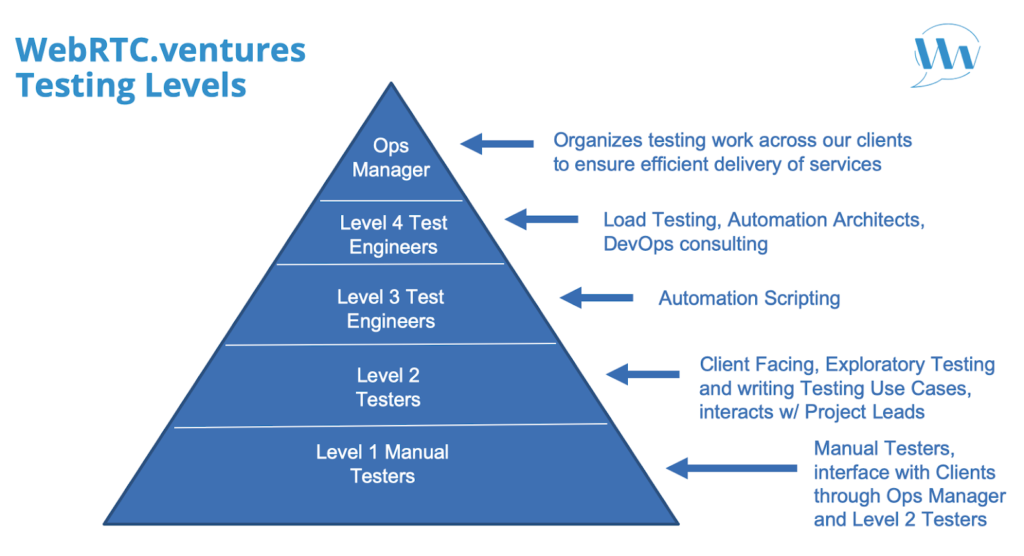

As the industry moves more from prototypes to production applications, we are formalizing our testing services here at WebRTC.ventures. With a tip of the hat to Alister’s testing pyramids, we have described our own testing pyramid below for our development clients.

This pyramid is organized more around the hierarchical levels of testing in our team and how they interact with our clients, as opposed to showing anything about the relative volume of tests to be executed.

Let’s work our way up through this diagram to explore the different levels of testing we can provide for your video application:

Level 1 – Manual Testing

The base of the pyramid is our Level 1 Manual Testers. These testers have access to a lab of different mobile devices and computers so that they can test applications in a variety of browsers and operating systems. These testers can also use tools such as BrowserStack for browser compatibility testing. However, the tools commonly used for web apps don’t generally accommodate video call testing, so more manual testing is called for in these circumstances. Our manual testers will follow test scripts developed for them by the Level 2 Testers.

We generally charge our clients for this device lab testing on an hourly basis. Or, we build in a certain volume of these tests for ongoing development clients. Because Level 1 Testers are following a script written by others, they can multitask across all of our clients as needed.

Level 2 Testing – Exploratory and Use Cases

Our Level 2 Testers are also manual testers, but they have more direct knowledge of our clients’ applications. Level 2 Testers are how we primarily performed testing in the past. A tester is dedicated to only one or two different clients, so that they get to know that client’s needs very well. They participate in Scrum team meetings and are client-facing, meaning they work with the client directly to better understand how a feature should work so that they can test it. Level 2 Testers may be involved in testing the full application or only the video portion, depending on the nature of our contract with the client. (Sometimes we augment an existing product team just to build their video solution; other times we build the full application.)

Because our Level 2 Testers have the most knowledge about each client’s product, they do most of the exploratory and creative testing work. They also document that knowledge by writing test cases, which are shared up and down the pyramid with Level 1 and Level 3 Testers to leverage that knowledge across our broader development team.

Level 2 Testers are generally built into our development contracts. As part of a monthly fee paid by the client, a specific Level 2 Tester will be dedicated either part-time or full-time to work alongside our developers in a very agile fashion.

Level 3 Test Engineers – Test Automation

Level 3 Test Engineers are part tester, part developer, and part DevOps engineer. These members of our team will produce test automation scripts and continuous development environments that allow an automated suite of tests to run against your application in a production-like environment. The scripts are written using GUI level automation tools such as Selenium so that they can be based on scripts provided by Level 2 Testers, and will automate “happy paths” and multiple scenarios across your application.

A “happy path” is a path through your system which tests a variety of functionality at a high level. It is not intended to be a comprehensive code coverage test that executes 100% of your functionality all the time. Those sorts of tests are very time consuming and expensive to maintain, so we don’t find them to be very effective for our client base.

At an introductory level, these tests perform like a “smoke test”: a minimal test that shows the system is up and running. In a common video application scenario, it should confirm that the web application loads, that a typical user can log in, and that the user can start a video call. Even a limited automated test like this confirms that all parts of the application architecture are functioning, including our video media servers. This type of test can be run after every code deployment as a quick sanity check that the deployment didn’t crash the site. It can also be scheduled to run regularly against production as a way of confirming system uptime.
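
To make the smoke test idea concrete, here is a minimal sketch in Python. It is not our actual test suite: the names `run_smoke_test`, `SmokeResult`, and the three placeholder checks are hypothetical, and each placeholder simply returns `True` where a real check would drive a browser (for example through Selenium) against the deployed application. What it illustrates is the shape of the test: a short, ordered list of steps that stops at the first failure.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple

# Each step is a (name, check) pair; check() returns True when the step passes.
Step = Tuple[str, Callable[[], bool]]


@dataclass
class SmokeResult:
    passed: bool
    failed_step: Optional[str]  # name of the first failing step, if any
    completed: List[str]        # names of the steps that passed before any failure


def run_smoke_test(steps: List[Step]) -> SmokeResult:
    """Run the steps in order and stop at the first failure."""
    completed: List[str] = []
    for name, check in steps:
        try:
            ok = check()
        except Exception:
            # A crash inside a step (such as a browser timeout) counts as a failure.
            ok = False
        if not ok:
            return SmokeResult(False, name, completed)
        completed.append(name)
    return SmokeResult(True, None, completed)


# Placeholder checks (assumptions for illustration only). A real suite would
# replace each body with browser automation against the running application.
def app_loads() -> bool:
    return True


def user_can_log_in() -> bool:
    return True


def user_can_start_call() -> bool:
    return True


SMOKE_STEPS: List[Step] = [
    ("application loads", app_loads),
    ("user can log in", user_can_log_in),
    ("user can start a video call", user_can_start_call),
]

if __name__ == "__main__":
    result = run_smoke_test(SMOKE_STEPS)
    print("PASS" if result.passed else f"FAIL at: {result.failed_step}")
```

Because the runner reports the first failing step by name, the same script could serve both as a post-deployment check and as a scheduled uptime probe.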

A more complicated suite of automated tests can test your application across different use cases, different customer journeys, and even test browser compatibility and video quality using tools like Kite.

Level 3 Test Engineers are not included in our standard development contracts. For clients interested in the extra assurance provided by test automation, we can provide this for an additional fee. It can also be a standalone service for a system a client has built themselves. Contact us for a quote on this service for your application.

Level 4 Test Engineers – Load Testing and Advanced DevOps

The test automation provided by our Level 3 Test Engineers is an excellent way to automate reliability into your system. But it doesn’t give you insight into how many users your system can scale to. We regularly have customers ask us, “How many video calls will this server configuration support?” While we can use public data to provide some generic predictions based on their use case and application architecture, the only way to answer that question definitively is load testing.

Our Level 4 Test Engineers provide a variety of DevOps consulting and load testing services to clients with the most demanding requirements for their production applications. These team members can assess your current architecture and recommend improvements to allow it to auto-scale as the number of users grows. To confirm system performance under load, they can also build on top of automation scripts like those developed by our Level 3 Test Engineers, and deploy those scripts to server farms to simulate large numbers of calls against your application. During a load test, they can assess the load on your servers and the quality of video calls on the system to help determine what the upper bounds of your application architecture may be.
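
One way to picture how a load test arrives at an upper bound is a stepwise ramp: increase the number of concurrent calls, measure call quality at each step, and stop when quality falls below an acceptable threshold. The Python sketch below illustrates that loop only. `find_call_capacity` and `measure_quality` are hypothetical names, and `fake_quality` is an invented stand-in with made-up numbers; in a real test the measurement would come from automation scripts running on load-generating servers.

```python
from typing import Callable, List, Optional


def find_call_capacity(
    measure_quality: Callable[[int], float],
    levels: List[int],
    min_quality: float,
) -> Optional[int]:
    """Return the largest tested number of concurrent calls that kept quality
    at or above min_quality, or None if even the first level failed.

    measure_quality(n) is a hypothetical hook: it should drive n concurrent
    calls against the system and return a quality score (higher is better).
    """
    supported: Optional[int] = None
    for n in sorted(levels):
        if measure_quality(n) < min_quality:
            break  # stop ramping once quality drops below the threshold
        supported = n
    return supported


# Invented stand-in for a real measurement, for illustration only:
# quality is perfect up to 100 calls, then degrades linearly.
def fake_quality(n: int) -> float:
    return 1.0 if n <= 100 else max(0.0, 1.0 - (n - 100) / 200)


if __name__ == "__main__":
    capacity = find_call_capacity(fake_quality, [25, 50, 100, 150, 200], min_quality=0.8)
    print(f"Highest load level that met the quality threshold: {capacity}")
```

The result is only as precise as the chosen load levels, so a real ramp would usually use finer steps near the point where quality starts to degrade.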

The scale of a load test is limited only by your willingness to pay for the server resources needed to conduct it. Our Level 4 Test Engineers can design and implement a load test for a system that we have built for you, or for your own existing system. These fees are not built into our standard development contracts, so contact us for a quote on performing load tests for your applications.

The Ops Manager – Holding it all together

At the top of our organizational pyramid is our Testing Operations Manager, who ensures that our testing staff and device lab resources are used as efficiently as possible across our clients. Some of our development clients will have access to these testing services as part of their development contracts. Others will contract for just one or a few of the layers of our testing services, and the Ops Manager is responsible for coordinating and managing those requests across clients.

How we fit in

Our team at WebRTC.ventures has been building applications since 2010, with a focus on video applications since 2015. In that time, we have worked primarily as a remote team with developers all around North and South America, serving clients globally. Live video is a part of our DNA.

As we formalized our testing services, however, we decided to build our first brick-and-mortar office in Panama City, Panama. This office is focused on providing each layer of the testing pyramid described above, especially the Level 1 and Level 2 Testers who require access to our on-site mobile device laboratory. Our Ops Manager, Rafael Amberths, leads that office and coordinates the work across our testing team members.

Building and testing the next generation of live video apps

The video naysayers may have been silenced by the incredible growth and acceptance of video apps and remote work during the coronavirus pandemic. But that doesn’t mean that all is rosy in video application development.

Developing, testing, and deploying custom live video applications is still hard. This is why we’re taking our years of pre-pandemic experience doing this for clients and formalizing it into a set of testing services that are ready-made for the post-pandemic world of live video applications. Testing your WebRTC video application is not as simple as buying a single tool or adopting a single methodology. It requires blending together a variety of techniques and expertise that most teams don’t have. We’re working on making it easier for you to tap into our expertise!

Do you need an experienced development and testing team to deploy your custom live video application using WebRTC? Contact us today!